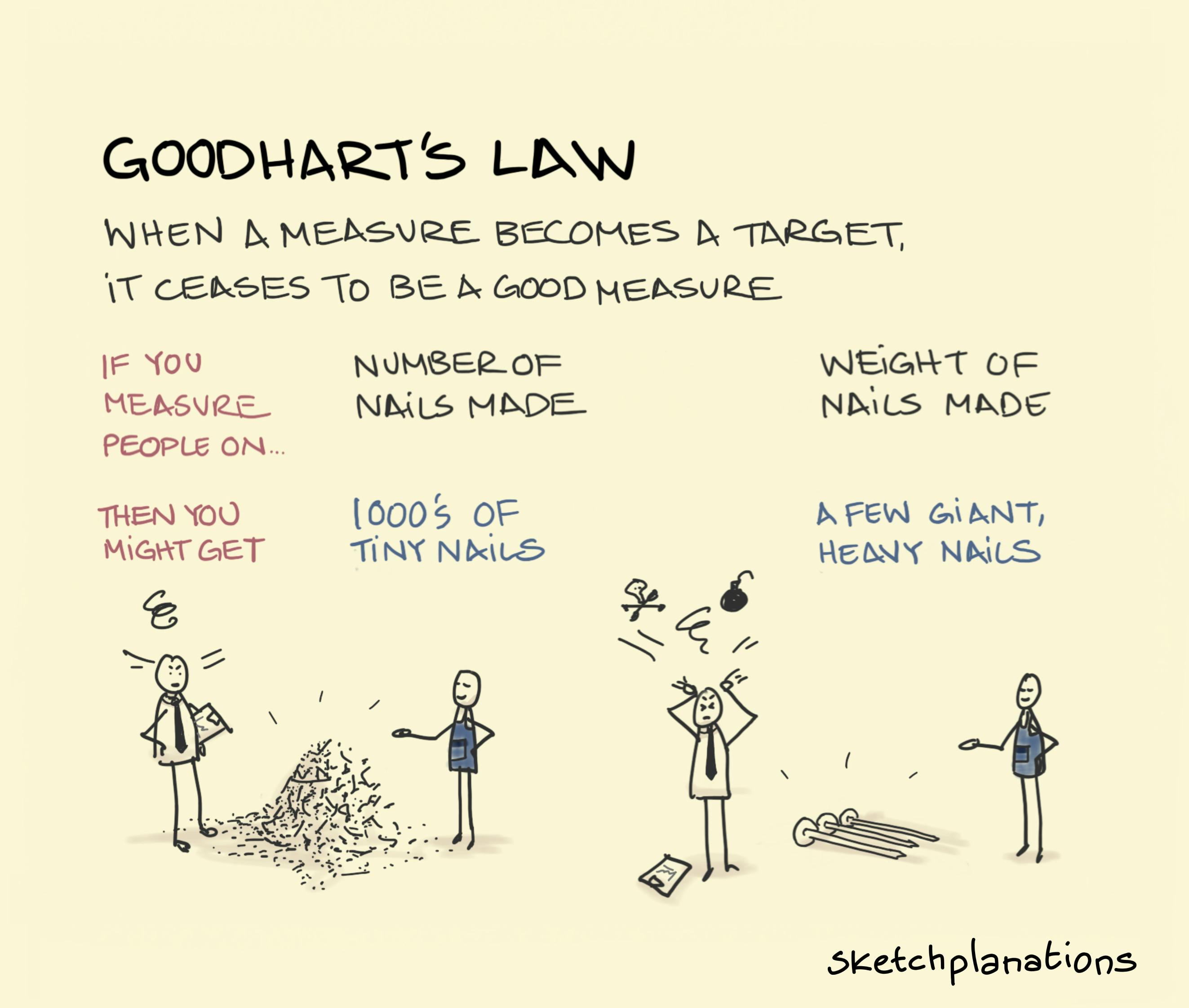

Goodhart’s Law: when a measure becomes a target, it ceases to be a good measure.


Goodhart's law states that when a measure becomes a target, it ceases to be a good measure. In other words, if you pick a measure to assess performance, people find a way to game it.

To illustrate, I like the (probably apocryphal) story of a nail factory that sets "Number of nails produced" as its measure of productivity. The workers figure out they can easily make tons of tiny nails to hit the target.

Yet, when the frustrated managers switch the assessment to "weight of nails made", the workers again outfox them by making a few giant heavy nails.

There's also the story of measuring fitness by pedometer steps, only to find the pedometer attached to the dog.

Some strategies for countering this are to look for better, harder-to-game measures, assess with multiple measures, or allow a little discretion. More detail in this nice little article.

I also liked an idea I read in Measure What Matters of pairing a quantity measure with a quality measure: for example, assessing both the number of nails and customer satisfaction with the nails.
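To make the pairing idea concrete, here is a toy sketch of the nail factory. Everything in it is an assumption for illustration: the three nail types, their timings, weights, and satisfaction scores are made-up numbers, and `best_for` simply picks whichever nail type scores highest on a given measure, standing in for workers optimizing for the target.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NailType:
    name: str
    seconds_each: int    # hypothetical time to make one nail
    grams_each: int      # hypothetical weight of one nail
    satisfaction: float  # hypothetical customer satisfaction, 0 to 1


# Made-up options the workers could choose to produce.
OPTIONS = [
    NailType("tiny", seconds_each=1, grams_each=1, satisfaction=0.1),
    NailType("standard", seconds_each=3, grams_each=10, satisfaction=0.9),
    NailType("giant", seconds_each=60, grams_each=5000, satisfaction=0.1),
]

SHIFT_SECONDS = 3600  # one hour of production


def count(nail: NailType) -> int:
    """Number of nails produced in a shift."""
    return SHIFT_SECONDS // nail.seconds_each


def weight(nail: NailType) -> int:
    """Total grams of nails produced in a shift."""
    return count(nail) * nail.grams_each


def paired(nail: NailType) -> float:
    """Quantity weighted by quality: count times satisfaction."""
    return count(nail) * nail.satisfaction


def best_for(measure) -> str:
    """Name of the nail type that maximizes the given measure."""
    return max(OPTIONS, key=measure).name


print("count target  ->", best_for(count))
print("weight target ->", best_for(weight))
print("paired target ->", best_for(paired))
```

With these invented numbers, the count target rewards tiny nails, the weight target rewards giant ones, and only the paired measure favors the standard nail. A determined worker could still game the satisfaction score, so this is a sketch of the idea rather than a cure.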

How strongly Goodhart's Law applies varies. John Cutler shared the Cutler Variation of Goodhart's Law:

"In environments with high psychological safety, trust, and an appreciation for complex sociotechnical systems, when a measure becomes a target, it can remain a good measure because missing the target is treated as a valuable signal for continuous improvement rather than failure."

Related Ideas to Goodhart's Law

Also see:

- The Law of Unintended Consequences

- The Cobra Effect

- Campbell's Law

- The Boaty McBoatface Effect

- The Streisand Effect