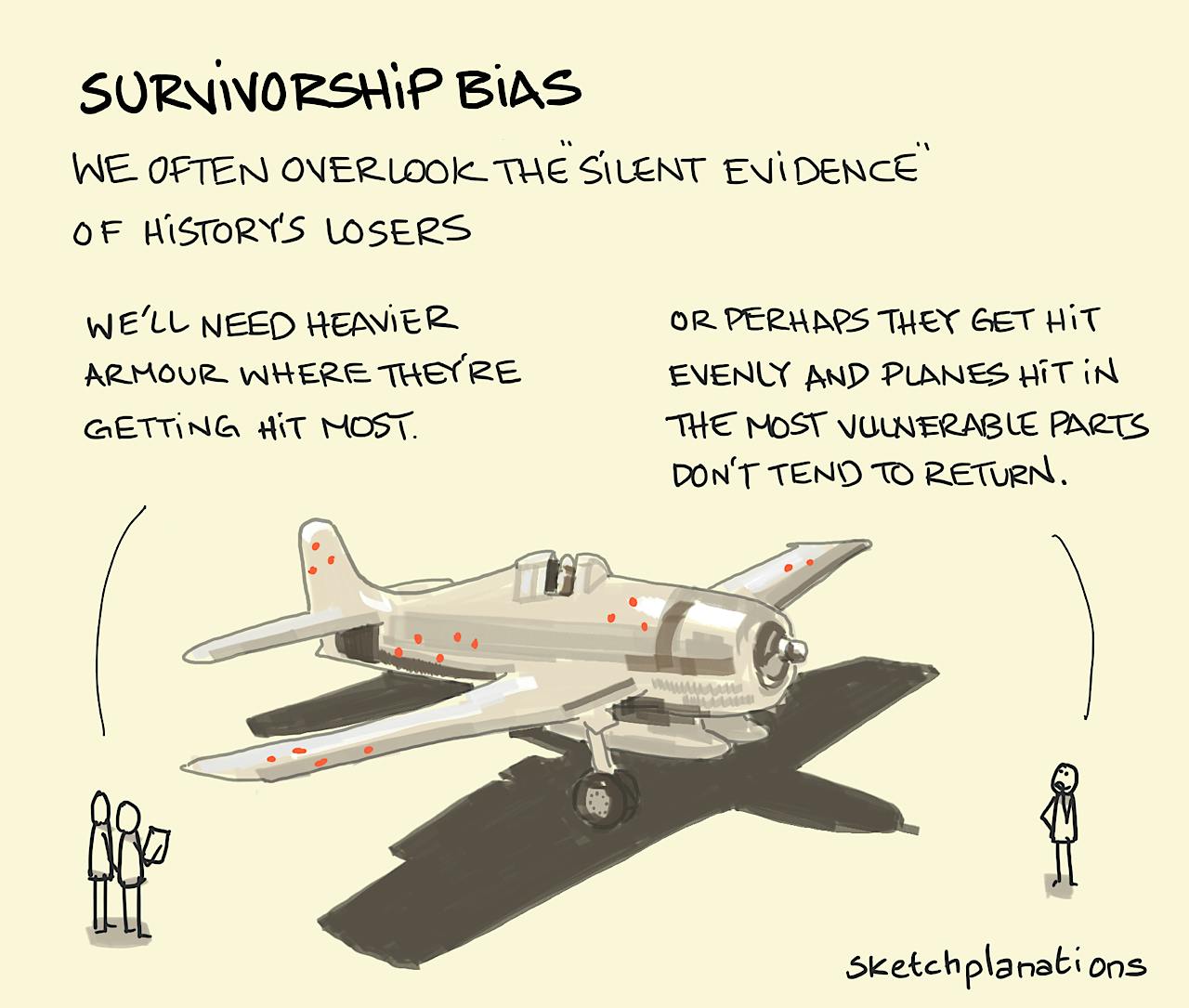

Survivorship bias

So the story goes, as told by Stephen Sigler in Nature, May 1989:

"The US military studied fighter planes returning from missions to try to improve their survival rate and were considering adding heavy armour to those parts of the plane that tended to show the greatest concentration of hits from enemy fire, until statisticians pointed out the fallacy of that argument. The more vulnerable parts of the plane were those with the fewest hits; planes hit there tended not to return at all. The single most vulnerable part, the pilot’s head, was without serious scar in the sample of planes that returned."

It’s easy to draw correlations from what we see in front of us. But what we see usually represents just a small part of what has happened. Focusing on the evidence we can easily see at the expense of what we can’t leads to survivorship bias. To put it another way, when Bill Gates drops out of college and starts Microsoft, it might seem like dropping out is a path to success for others too. But that ignores all the dropouts who didn’t create Microsofts and whom, consequently, you never heard about.

Silent evidence, the evidence that we don’t or can’t easily consider, is a term from Nassim Nicholas Taleb.

While trying to check the veracity of the planes story, I enjoyed Bill Casselman’s American Mathematical Society article, The Legend of Abraham Wald. Its postscript points to some of the sources behind the story, including a mention of Stephen Sigler’s letter in Nature quoted above.

I’ve covered survivorship bias before, but I like this story so much I thought it was worth doing again.